How to benchmark a Spring bean with JMH

Although the "M" in JMH stands for "Microbenchmark", you can also use JMH for more macro-level benchmarks.

In this article we want to measure the time taken by some methods of a Spring bean, so this is definitely not a microbenchmark.

Measuring higher-level methods serves several purposes:

- have a rough idea of the time taken by an implementation

- compare solutions before the final commit

- avoid "debates" during code review about which solution would be faster

- have an execution loop to ease profiling

Example application

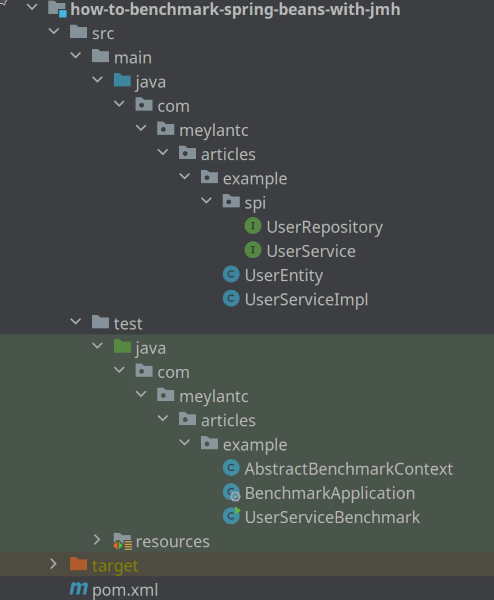

The structure of our test application is following:

In this example we want to measure our UserService, which performs inserts into a database. The example is not realistic, but it shows how data access layer methods can be measured.

For this we will use an actual database, started with Testcontainers.

Note that when you measure the persistence layer, we recommend that you:

- adapt the database configuration to be similar to production, for example the memory settings or replication to another instance, which have an impact on performance

- load realistic data, otherwise the behavior will almost certainly differ from the production environment

- use a proxy like Toxiproxy, or the tc Unix utility in your container (if your OS permits it, which is not the case with WSL), to simulate network conditions such as latency
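When neither Toxiproxy nor tc is available, a rough substitute is to delay calls inside the JVM. The sketch below is not Toxiproxy and does not shape real network traffic: it only wraps a bean in a JDK dynamic proxy that sleeps before each call. The UserRepository interface and the LatencySimulator class are hypothetical names used for illustration.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.time.Duration;

/** Hypothetical interface standing in for a data access bean. */
interface UserRepository {
    int insert(String email);
}

final class LatencySimulator {

    /**
     * Wraps the target in a JDK dynamic proxy that sleeps for the given delay
     * before delegating each call. This approximates latency at the Java call
     * level only; real network effects (bandwidth, packet loss) are not covered.
     */
    static <T> T withLatency(Class<T> type, T target, Duration delay) {
        InvocationHandler handler = (proxy, method, methodArgs) -> {
            Thread.sleep(delay.toMillis());
            try {
                return method.invoke(target, methodArgs);
            } catch (InvocationTargetException e) {
                throw e.getCause(); // rethrow the exception thrown by the target
            }
        };
        Object instance = Proxy.newProxyInstance(
                type.getClassLoader(), new Class<?>[] {type}, handler);
        return type.cast(instance);
    }

    public static void main(String[] args) {
        // In-memory stub that pretends one row was inserted
        UserRepository fast = email -> 1;
        UserRepository slow = withLatency(UserRepository.class, fast, Duration.ofMillis(50));

        long start = System.nanoTime();
        int rows = slow.insert("someone@example.com");
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;

        System.out.println("rows=" + rows + ", elapsed=" + elapsedMs + " ms");
    }
}
```

This keeps the benchmark code unchanged: only the bean handed to the benchmark is swapped for the delayed one.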

Step 1 - add JMH dependencies

pom.xml

<properties>

<jmh.version>1.37</jmh.version>

</properties>

<dependencies>

<dependency>

<groupId>org.openjdk.jmh</groupId>

<artifactId>jmh-core</artifactId>

<version>${jmh.version}</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.openjdk.jmh</groupId>

<artifactId>jmh-generator-annprocess</artifactId>

<version>${jmh.version}</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>${maven-compiler-plugin.version}</version>

<configuration>

<source>${java.version}</source>

<target>${java.version}</target>

<annotationProcessorPaths>

<path>

<groupId>org.openjdk.jmh</groupId>

<artifactId>jmh-generator-annprocess</artifactId>

<version>${jmh.version}</version>

</path>

</annotationProcessorPaths>

<annotationProcessors>org.openjdk.jmh.generators.BenchmarkProcessor</annotationProcessors>

</configuration>

</plugin>

</plugins>

</build>

Step 2 - set up your application

I recommend creating a dedicated @SpringBootApplication for benchmarks, so that you can remove any unnecessary beans or configuration and speed up the benchmark startup time.

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.context.annotation.ComponentScan;

@SpringBootApplication

// Here I suggest using @ComponentScan to exclude any beans that are not needed to run the benchmark,

// in order to make the benchmark start faster

@ComponentScan(basePackages = "com.ringbufferlab.articles.example"

// excludeFilters = {

// @ComponentScan.Filter(type = FilterType.ASSIGNABLE_TYPE, value = BeanToExclude.class),

// @ComponentScan.Filter(type = FilterType.ASSIGNABLE_TYPE, value = ConfigurationToExclude.class)

// }

)

public class BenchmarkApplication {

}

Create a context class which can be shared between benchmarks.

In this class you can:

- prepare your testcontainers, if any

- prepare your configuration properties, if any

- prepare the application startup

import org.springframework.boot.SpringApplication;

import org.springframework.context.ConfigurableApplicationContext;

import org.testcontainers.containers.PostgreSQLContainer;

import org.testcontainers.utility.MountableFile;

import java.util.Properties;

public class AbstractBenchmarkContext {

private static PostgreSQLContainer<?> postgreSQLContainer;

protected static ConfigurableApplicationContext appContext;

static {

// This is how you can add a custom PostgreSQL configuration, useful to measure with different settings or to enable extensions that help investigate performance issues

MountableFile postgresConf = MountableFile.forClasspathResource("postgres/postgres.conf");

MountableFile postgresSh = MountableFile.forClasspathResource("postgres/postgres.sh");

postgreSQLContainer = new PostgreSQLContainer<>("postgres:15.4")

.withDatabaseName("benchmark_db")

.withUsername("benchmark")

.withPassword("benchmark")

.withCopyToContainer(postgresSh, "/docker-entrypoint-initdb.d/postgres.sh")

.withCopyToContainer(postgresConf, "/postgres.conf")

.withCommand("postgres");

postgreSQLContainer.withReuse(true).start();

}

protected static void setup(Class<?> applicationClass) {

SpringApplication application = new SpringApplication(applicationClass/*, any extra configuration class to import */);

Properties properties = new Properties();

properties.put("spring.datasource.url", postgreSQLContainer.getJdbcUrl());

properties.put("spring.datasource.username", postgreSQLContainer.getUsername());

properties.put("spring.datasource.password", postgreSQLContainer.getPassword());

application.setAdditionalProfiles("benchmark");

application.setDefaultProperties(properties);

appContext = application.run();

}

}

Step 3 - write the benchmark

import com.ringbufferlab.articles.example.spi.UserService;

import org.openjdk.jmh.annotations.Benchmark;

import org.openjdk.jmh.annotations.BenchmarkMode;

import org.openjdk.jmh.annotations.Mode;

import org.openjdk.jmh.annotations.OutputTimeUnit;

import org.openjdk.jmh.annotations.Scope;

import org.openjdk.jmh.annotations.Setup;

import org.openjdk.jmh.annotations.State;

import org.openjdk.jmh.runner.Runner;

import org.openjdk.jmh.runner.RunnerException;

import org.openjdk.jmh.runner.options.Options;

import org.openjdk.jmh.runner.options.OptionsBuilder;

import org.openjdk.jmh.runner.options.TimeValue;

import java.time.LocalDate;

import java.util.UUID;

import java.util.concurrent.ThreadLocalRandom;

import java.util.concurrent.TimeUnit;

import static com.ringbufferlab.articles.example.UserServiceBenchmark.BenchmarkContext.endExclusive;

import static com.ringbufferlab.articles.example.UserServiceBenchmark.BenchmarkContext.startInclusive;

public class UserServiceBenchmark {

@State(Scope.Benchmark)

public static class BenchmarkContext extends AbstractBenchmarkContext {

static long startInclusive = LocalDate.of(1980, 1, 1).toEpochDay();

static long endExclusive = LocalDate.now().toEpochDay();

UserService userService;

static {

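// This starts the Spring Boot application.

// The inherited static method is called by class name, because "super" cannot be used in a static initializer.

AbstractBenchmarkContext.setup(BenchmarkApplication.class);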

}

@Setup

public void setup() {

// Getting the bean we want to test

userService = appContext.getBean(UserService.class);

}

}

@Benchmark

@BenchmarkMode(Mode.Throughput)

@OutputTimeUnit(TimeUnit.SECONDS)

public void insert(BenchmarkContext context) {

context.userService.insert(new UserEntity(UUID.randomUUID().toString(), "firstname", "lastname", UUID.randomUUID() + "@example.com", randomDate()));

}

public static LocalDate randomDate() {

long startEpochDay = startInclusive;

long endEpochDay = endExclusive;

long randomDay = ThreadLocalRandom

.current()

.nextLong(startEpochDay, endEpochDay);

return LocalDate.ofEpochDay(randomDay);

}

public static void main(String[] args) throws RunnerException {

// JMH configuration

Options opt = new OptionsBuilder()

.include(UserServiceBenchmark.class.getSimpleName())

.forks(1)

.warmupIterations(1)

.warmupTime(new TimeValue(10, TimeUnit.SECONDS))

.threads(1)

.measurementIterations(2)

.measurementTime(new TimeValue(20, TimeUnit.SECONDS))

.build();

new Runner(opt).run();

}

}
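To make the runner options above more concrete, here is a deliberately naive, hand-rolled loop that mimics what warmup iterations, measurement iterations and throughput mode mean. It is only an illustration under simplifying assumptions, not how JMH works internally (JMH also forks JVMs, protects against dead code elimination, computes error margins, and more). NaiveThroughput and its methods are hypothetical names.

```java
import java.util.concurrent.TimeUnit;

final class NaiveThroughput {

    /** Runs the task repeatedly for at least the given duration and returns operations per second. */
    static double opsPerSecond(Runnable task, long durationMillis) {
        if (durationMillis <= 0) {
            throw new IllegalArgumentException("durationMillis must be positive");
        }
        long durationNanos = TimeUnit.MILLISECONDS.toNanos(durationMillis);
        long start = System.nanoTime();
        long ops = 0;
        long elapsed;
        do {
            task.run();
            ops++;
            elapsed = System.nanoTime() - start;
        } while (elapsed < durationNanos);
        return ops * 1_000_000_000.0 / elapsed;
    }

    /** Runs the warmup iterations (results discarded), then averages the measured iterations. */
    static double measure(Runnable task, int warmupIterations,
                          int measurementIterations, long iterationMillis) {
        if (measurementIterations <= 0) {
            throw new IllegalArgumentException("measurementIterations must be positive");
        }
        for (int i = 0; i < warmupIterations; i++) {
            opsPerSecond(task, iterationMillis); // lets the JIT and caches warm up
        }
        double total = 0;
        for (int i = 0; i < measurementIterations; i++) {
            total += opsPerSecond(task, iterationMillis);
        }
        return total / measurementIterations;
    }

    public static void main(String[] args) {
        StringBuilder sink = new StringBuilder();
        Runnable task = () -> {
            sink.setLength(0);
            sink.append(System.nanoTime());
        };
        // Same shape as the options above, with much shorter iterations
        double score = measure(task, 1, 2, 200);
        System.out.printf("%.0f ops/s%n", score);
    }
}
```

The warmup result is thrown away on purpose: the first seconds of a Spring and database workload include class loading, JIT compilation and connection pool filling, which would otherwise lower the score.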

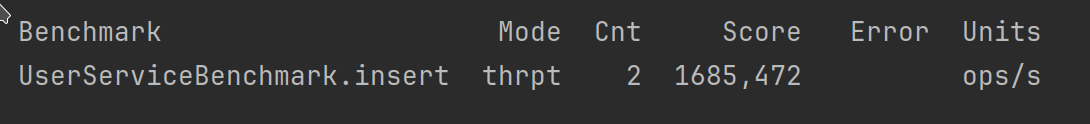

"Et voilà", :

Conclusion

Java and its ecosystem make this kind of test very easy, so it would be a shame not to use it.

It is better to spend two hours validating a hypothesis than to cause a performance issue for your customers. Don't you think so?

Depending on what you measure, the results may not reflect reality.

Indeed, if you measure a part of your application that calls an external service, and that service is deployed locally, you will probably forget to simulate the network latency, or the bandwidth consumed by neighboring services, that you would have in production.

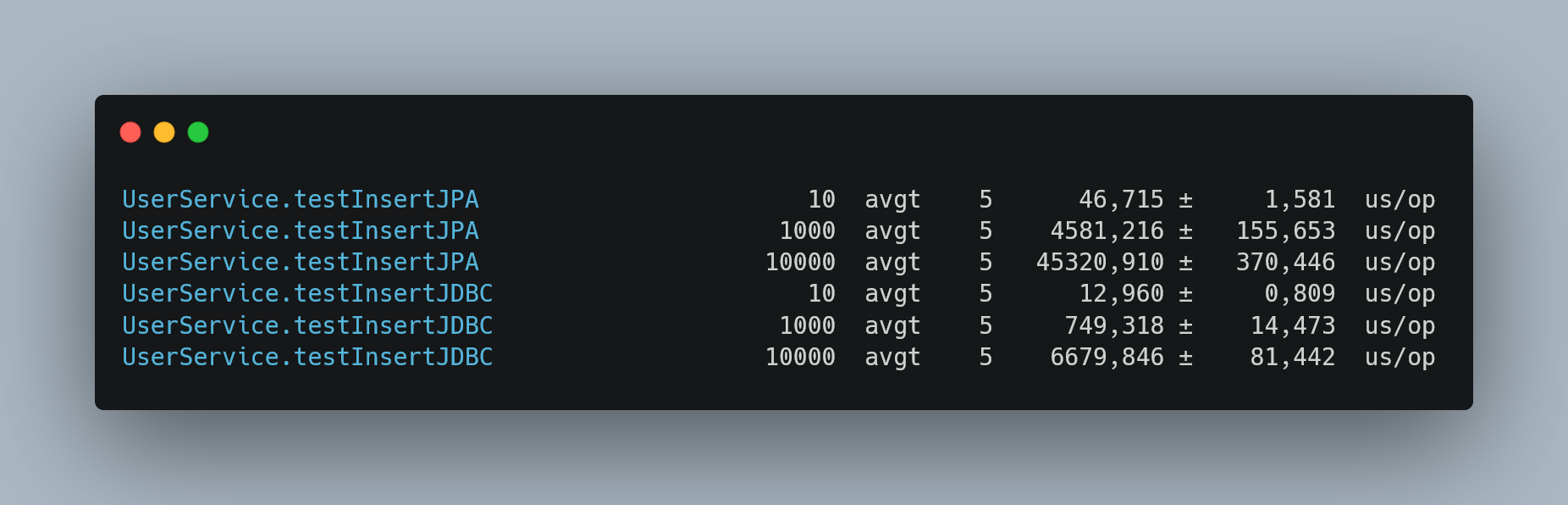

The goal of the method described in this article is to validate hypotheses, get a rough idea of execution time, and compare multiple implementations.
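When comparing implementations, keep in mind that JMH reports each score together with an error margin. A cautious way to read two results is to check whether the intervals score ± error overlap before declaring a winner. The sketch below assumes you copy the numbers from the JMH output by hand; ScoreComparison is a hypothetical helper and the figures in main are made up for illustration.

```java
final class ScoreComparison {

    /**
     * Returns true when the intervals [score - error, score + error] of the two
     * results do not overlap, i.e. the difference is larger than the reported noise.
     */
    static boolean clearlyDifferent(double scoreA, double errorA,
                                    double scoreB, double errorB) {
        double lowA = scoreA - errorA;
        double highA = scoreA + errorA;
        double lowB = scoreB - errorB;
        double highB = scoreB + errorB;
        return highA < lowB || highB < lowA;
    }

    public static void main(String[] args) {
        // Made-up throughput numbers in ops/s, for illustration only
        System.out.println(clearlyDifferent(1500.0, 120.0, 900.0, 80.0)); // true: 1380..1620 vs 820..980
        System.out.println(clearlyDifferent(900.0, 80.0, 950.0, 90.0));   // false: 820..980 vs 860..1040
    }
}
```

With very few forks and measurement iterations, as in the configuration used in this article, the error margin can be wide, so overlapping intervals are a hint to run longer rather than proof that two solutions are equivalent.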